'Radical collaboration' through machine learning

By Joe Wilensky

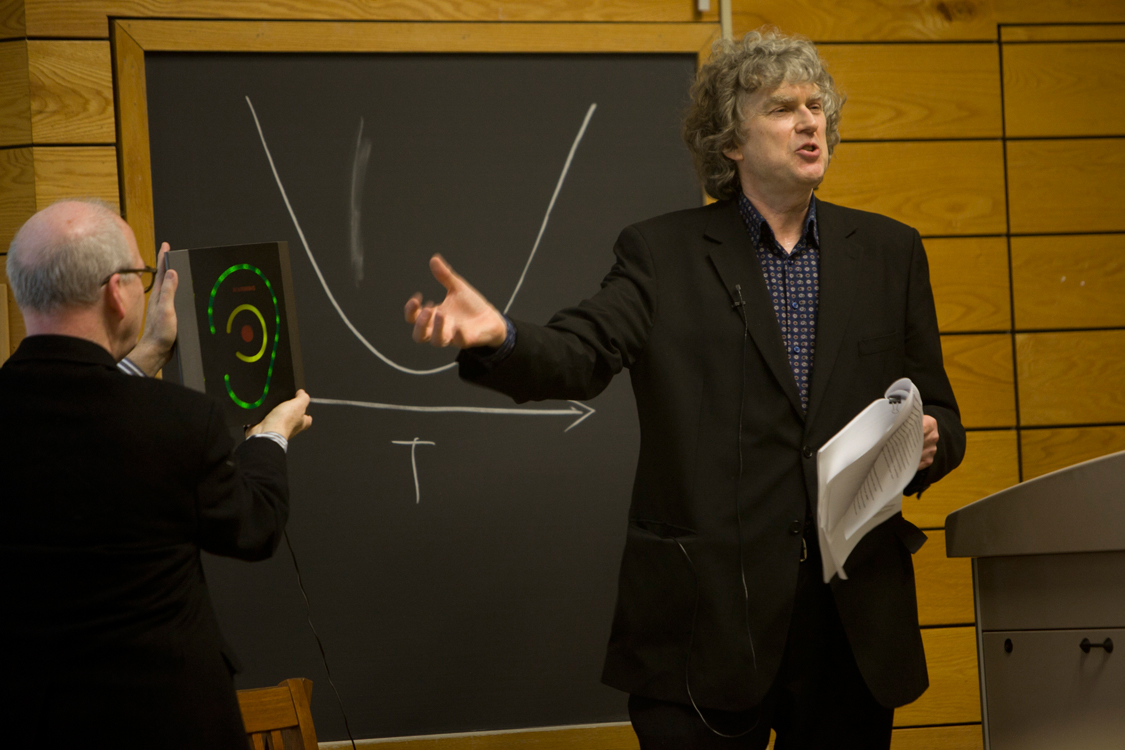

Trevor Pinch, the Goldwin Smith Professor of Science and Technology Studies, spent the fall 2016 semester on sabbatical at Cornell Tech in New York City, where he began conducting research with the Connective Media hub, which focuses on social technologies and the role of new media. It was there he began collaborating with Serge Belongie, professor of computer science at Cornell Tech, who had been working on “deep learning” research and fine-grained visual categorization.

Belongie’s research – teaching a computer to visually recognize what species a bird is or what specific type of plant or flower something is, as opposed to just recognizing that something is a plant or flower – had all been situated in the natural world. After talking with Pinch, he began to think about how to apply recognition approaches to man-made objects – in this case, musical instruments.

Pinch had an ongoing collaboration with a group at École Polytechnique Fédérale de Lausanne (EPFL) that had digitized 50 years of audio and video from the Montreux Jazz Festival in Switzerland. The performers and concerts at Montreux were all known, but not the musical instruments they used. Pinch imagined finding a way for computers to identify those instruments from the audio recordings alone, but as he began collaborating with Belongie, he realized that exploring machine learning through visual recognition was more workable and held more immediate promise for applications of the technology.

Is it easier to teach computers techniques of visual recognition, rather than audio recognition?

Belongie: The machine learning community is putting a lot more effort into visual recognition. One thing that’s well-known about all these deep-learning approaches is that they require gigantic amounts of training data, and the infrastructure is well developed for people to annotate, or tag, images for these large training sets. There are certain characteristics of audio that make it trickier to annotate. For example, in the case of images, you can show an annotator 25 thumbnails on a screen all at once, and the human visual system can process them very quickly and in parallel, and you can use efficient keyboard shortcuts to label things. Imagine playing 25 audio clips at the same time – making sense of that is really hard, and it just raises the burden of annotation effort.

Pinch: So that’s why we’ve been focusing on visual. This point about tagging is very interesting, because I’m new to this whole business of computer recognition of anything. My background is in science and technology studies and sound studies. I had originally started collaborating with that group in Switzerland, and the idea was to use that Montreux Jazz Festival collection. But then I realized the issue there: They have lists of who the performers were at every concert, but they haven’t got lists of the instruments. So first, somebody has to go through and tag all the instruments in the Montreux collection before you can start to train a computer.

One of the reasons we have been able to make progress on this project is that we found a website where visual images of musical instruments are already tagged, in a kind of crowdsourced way: People send in videos or still images of musical instruments. That’s the source of data we’re using as a training set to begin with.

Belongie: We identified an undergraduate who started to poke away at the project a bit, and now there’s a Ph.D. student, too, who is working on it this spring.

Does your work feel like a radical collaboration, crossing and combining the humanities with technology?

Pinch: I think this is indeed a radical collaboration. I had no idea how many advances there had been in the field of computer-based visual recognition. And it has happened pretty rapidly, it seems to me.

Belongie: The media tends to get a bit breathless whenever it talks about “deep learning,” as if you just push a button and it solves everything. So perhaps the radical aspect of this is that we aren’t putting deep learning on a pedestal. Instead, we’re incorporating it as a commodity in a research pipeline with many other vital components.

A lot of the excitement in the AI [artificial intelligence] community comes from engineers making gizmos for other engineers, or products that are targeted toward a geeky audience. And I think in this case, even though it’s admittedly a geeky corner of the music world, the people we’re targeting aren’t computer scientists. We’re talking about archiving high-quality digital footage, and for it to be a success, it needs to enable research in the humanities. This work isn’t primarily geared toward publishing in artificial intelligence.

Pinch: I wrote a book [“Analog Days”] about electronic music synthesizer inventor Robert Moog, Ph.D. ’65, and one of the interesting things for me was that Moog, in the foreword to my book, wrote that synthesizers are one of the most sophisticated technologies that we as humans have evolved. And actually, that’s quite an insight – thinking of a musical instrument as a piece of technology enables me to apply all sorts of ideas that I’ve been working on in the field of science and technology studies and the history of technology. Of course, pianos are mass produced, synthesizers are mass produced, and they are kind of machine-like; can this start to broaden the perspective on what is a musical instrument?

Another thing I’ve been talking to Serge about is that one of my instincts was to start this project by getting some well-known musicians and interviewing them about how they recognize instruments visually, how they would do this task – how does a human do this task? It’s very interesting, because that’s not the approach we’re following. I learned something from that: The computer is learning in a different way than a human would.

And that’s a very interesting thing for a humanist to discover, how this visual recognition works. And it stretches and widens my own thinking about what musical instruments are and how we should start to think about them; maybe “musical instrument” is the wrong term, and we should start thinking about them as “sound objects” because we’re including headphones, microphones and any piece of music gear.

Belongie: And, on the flip side, computer scientists have a lot to learn about how experts in their respective fields learn. In deep learning, [we’re] still embarrassingly dependent on labeled training data. For example, if you want a machine to recognize a black-capped chickadee, you probably need to show the machine hundreds of examples of that bird under all sorts of viewing conditions. There is a lot of talk in our field about how we should move toward what is known as unsupervised learning. We know humans make extensive use of unsupervised methods, in which we learn about the world simply by grabbing things, knocking them over, breaking things – basically making mistakes and trying to recover from them.

I felt inspired to watch a live concert video recently. If you mute the performance, just turn off the sound and watch, say, a five-minute performance of Queen, it’s surreal. You don’t hear the audience, you don’t hear the incredible music, but if you look at it through the lens of a computer vision researcher, you’re actually getting a whole bunch of views of all the performers and their instruments, close up, far away, lots of different angles … it’s quite an opportunity for computer vision researchers, because you have these people on a stage exhibiting these distinct objects for several minutes at a time from all these different angles and distances. I wouldn’t dare say it should be easy, but it should be way easier than the general problem of object detection and recognition.

Pinch: For me, the computer science perspective is an incredibly radical perspective in that it’s leveling the field and making us think anew about something that, as a humanist, I’m very familiar with from one perspective – from the history of music. I’m in this project basically as an academic. If there are commercial possibilities, great, but I’m in it for the intellectual fulfillment of working on such a project and its research.

I keep remembering something I learned from Bob Moog: If you interact with people who are incredibly technically skilled in an area that you’re unfamiliar with, you can, in an open-minded way, ask smart questions and learn all sorts of interesting things.

Is there anything else you have learned about each other or how each of you views the world?

Pinch: I think we’re learning stuff all the time. One of the things I learned in an early conversation with Serge was that computer scientists like classification, and that initially the world of musical instruments looked like it might be too messy, because classifying instruments depends on the manufacturers and particular varieties; it’s not like scientific classification.

There’s very little logic to it other than: This is what people over time have found has worked. Computer scientists generally prefer to work with more rigorously categorized data.

Belongie: Yes – that is largely driven by the requirements for getting work published. Everything needs a crisp, concrete label. I think it could be liberating in this collaboration if the targets for publication could just be completely outside of computer science and have different metrics of success.

Pinch: In the humanities, we have other ways of classifying – I’m very influenced by philosopher Ludwig Wittgenstein, and he had this notion of “family resemblance” in classification … so that’s how I tend to think about classification, that’s another way into it.

How might this research have real-world uses?

Pinch: This ability for a computer to visually identify musical instruments could have applications for education. It could help archivists – instead of labeling these things manually, a computer could do it, tagging instruments.

I really see the technology itself – an app, if we develop an app, or teaching the computer to do this with a piece of software – as the main product from this collaboration.

Belongie: This research is not going to replace musical archivists, but there’s a tremendous amount of power that, if harnessed correctly, can help those people do their jobs. The ball is in my court to get some kind of preliminary results out, and then the learning will begin.

Through my work developing the Merlin Bird Photo ID app with the Lab of Ornithology, I have witnessed firsthand the passion of the birding community and how that allows us to build up large, crowdsourced data sets and constantly stress-test the system. A big part of that collaboration was that we had such a large, highly energized fan base that was basically begging us to take their photos and analyze them.

Pinch: A project like this is kind of perfect for application and engagement of wider communities – which is one of the themes of our campuses. You can think of other areas where you could have visual recognition of gear: high-end sailing, mountaineering, other technical gear. This technology could lead to something that can be put into the hands of people and be useful.
