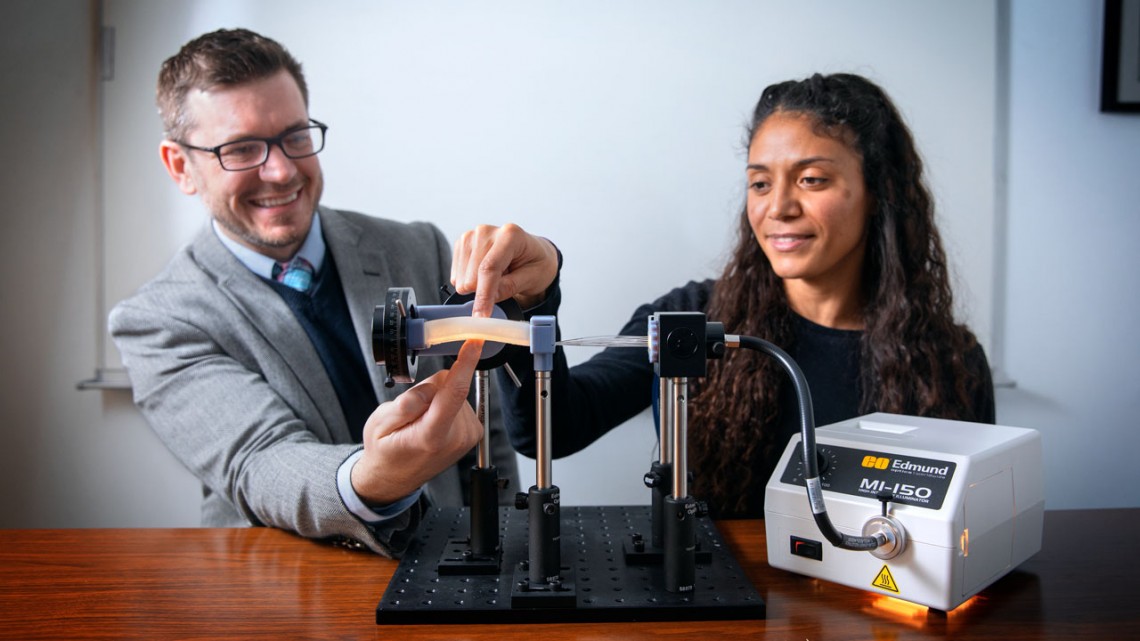

Associate professor Rob Shepherd and doctoral student Ilse van Meerbeek touch the elastomer foam containing optical fibers, a potential soft-robot technology that could be used along with machine learning to give a robot the ability to sense its orientation relative to its environment.

It’s alive: Fiber-optic sensors could help soft robots feel, adapt

By Tom Fleischman

Robots such as those used on assembly lines are really good at performing a specific task over and over. But even the best robots cannot adapt to unforeseen environmental variables.

“They’re not as good as we are” at sensing themselves relative to their surroundings, said Rob Shepherd, associate professor of mechanical and aerospace engineering. “They’re far less capable than organisms in terms of sensory measurements. … It’s the ability to feel, and respond to those feelings, that they’re missing.”

Shepherd’s latest work represents a step toward adding that missing ingredient to robots’ repertoire. Through the combination of optical fibers and machine learning, Shepherd’s group has begun to develop a robot with “awareness” of its configuration relative to its environment.

“We want to allow a soft robot to meaningfully interact with its environment, the way we do,” said Ilse van Meerbeek, a doctoral student in Shepherd’s lab and lead author of “Soft Optoelectronic Sensory Foams With Proprioception,” published Nov. 28 in Science Robotics. Also contributing was Assistant Professor Chris De Sa of the Department of Computer Science.

State-of-the-art soft robots currently employ either surface-mounted sensors to detect pressure and touch, or sensors embedded along a bendable limb to measure curvature. The ability of a robot to sense its state relative to its environment – known as proprioception – is still unexplored, but will be necessary as robots become more capable, Shepherd said.

“They’re going to have to know, with high accuracy, what their body configuration is, and what they’re interacting with,” he said.

Van Meerbeek, who has co-authored previous work on metal-foam hybrid materials, has developed a sensory system built around an array of 30 optical fibers embedded in an elastomer foam. The fibers simultaneously transmit light into the foam and receive the light that reflects back through it.

Different degrees of deformation, such as twisting and bending in multiple directions, change the patterns of diffusely reflected light, and the robot can interpret those patterns through machine learning. Van Meerbeek gathered data for two approaches: single-output models, which used classification to detect whether the foam was bent or twisted and regression to estimate the magnitude; and multi-output regression, which detected bending and twisting simultaneously.

Multiple machine-learning techniques, incorporated into the study with help from De Sa, were used to predict the type and magnitude of deformation, some with 100 percent accuracy.

“We showed in this work that we could detect both bending and twisting simultaneously using this array of optical fibers,” van Meerbeek said, noting that they confirmed the robustness of their machine learning model by systematically removing data from up to 20 of the 30 optical fibers.

Although the group classified only four deformation modes (bending in two directions and twisting in two directions), its members believe the method could be extended to more types of deformation.

Future work will address, among other things, the issue of drift: The optical fibers are embedded in a stretchable matrix, and eventually the fibers will become dislodged or change their position. “You train them in one configuration and they move to another,” Shepherd said, “so how accurate will the model be then?”

Shepherd says they are taking soft robotics in a new direction.

“It’s just a different way to make robots,” he said. “We don’t have to assemble robots from the top down, as is now done with bolts and hinges. We can mold and grow them from the bottom up, with sensors distributed throughout their interstices and actuators installed throughout the body. That’s much more like an organism than a robot.”

This work was supported by grants from the Air Force Office of Scientific Research, the National Science Foundation Graduate Research Fellowship Program and the Alfred P. Sloan Foundation.
