Scientists simulate clothing sounds for computer animation

By Bill Steele

Someday, virtual reality may be so well done that you won't be able to tell it's just computer animation. To make that happen we'll have to hear it as well as see it, from dramatic noises like the sound of a breaking glass to subtle details like the rustling of clothing as characters move.

Cornell researchers who specialize in creating sound for computer animation are adding the sounds of cloth to their repertoire. Doug James and Steve Marschner, associate professors of computer science, and graduate student Steven An reported their method of synthesizing cloth sounds at the SIGGRAPH conference Aug. 7 in Los Angeles.

Soundtracks for animated movies are still mostly constructed by hand, manually matching recorded sounds to the action. But it's not always possible to find appropriate sounds, and in interactive virtual environments you won't always know ahead of time what the action will be. One solution is to synthesize sound based on the physics of what's happening in a scene.

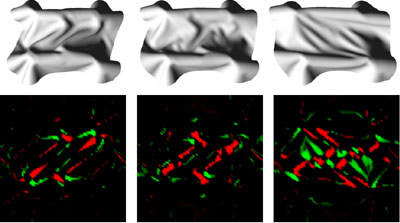

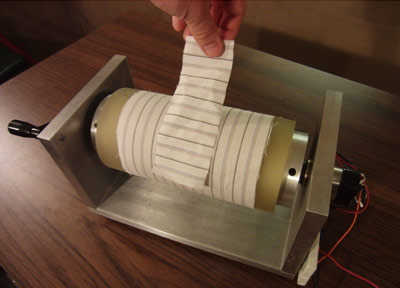

The Cornell researchers built a system that uses digitally synthesized sound as a sort of roadmap to insert real recorded sounds. They synthesize an approximate sound based on two characteristics of the action: friction, as the cloth moves against itself or other objects, and crumpling, as the cloth deforms. To teach their system to synthesize these sounds, the researchers made controlled recordings of friction sounds by spinning a cloth-covered roller while holding a piece of cloth against it, and crumpling sounds by manipulating a piece of cloth while avoiding any sliding contact.
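The article does not say exactly how friction and crumpling are turned into the rough synthetic sound. The sketch below is a hypothetical illustration, not the researchers' model. It assumes two per-frame motion cues (a sliding speed and a deformation rate, both in arbitrary units). Friction becomes broadband noise whose loudness follows the sliding speed, and crumpling becomes sparse clicks that occur more often as deformation increases. The function name and parameters are invented for this example.

```python
import random


def synthesize_target(slide_speeds, crumple_rates, frame_len=80, seed=0):
    """Hypothetical rough 'target' sound from per-frame motion cues.

    slide_speeds:  sliding speed for each animation frame (friction cue)
    crumple_rates: deformation rate for each animation frame (crumpling cue)
    Both are in arbitrary units; frame_len is audio samples per animation frame.
    """
    rng = random.Random(seed)  # seeded so results are repeatable
    samples = []
    for speed, crumple in zip(slide_speeds, crumple_rates):
        click_prob = min(1.0, 0.01 * crumple)  # more deformation, more clicks
        for _ in range(frame_len):
            # Friction: broadband noise whose loudness tracks sliding speed.
            friction = speed * rng.uniform(-1.0, 1.0)
            # Crumpling: sparse unit-amplitude clicks of random sign.
            click = rng.choice((-1.0, 1.0)) if rng.random() < click_prob else 0.0
            samples.append(friction + click)
    return samples
```

If a frame has no sliding and no deformation, it produces silence. A sound like this only needs to be a plausible guide and does not have to sound realistic, because real recordings replace it in the next stage.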

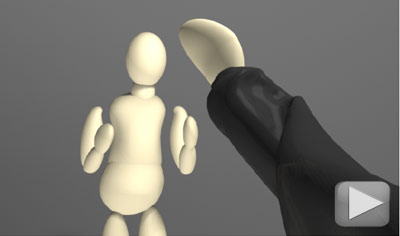

But the computer-synthesized sounds still aren't very realistic. For that reason, this "target" sound is broken up into tiny chunks, each lasting a small fraction of a second. The computer matches each chunk against a database of similar chunks taken from recordings of real cloth, and the recorded chunks it picks are then reassembled smoothly to produce a convincing soundtrack. Binaural recordings were included to produce "first-person" sounds for a viewer wearing stereo headphones.
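The matching-and-reassembly step resembles generic concatenative synthesis. Here is a minimal sketch that rests on stated assumptions and does not reproduce the Cornell system. Chunks are compared by a single feature, root-mean-square loudness. A hypothetical `continuity_weight` stands in for the kind of matching rule a sound designer might adjust: it penalizes jumping to a recorded chunk that does not directly follow the previous pick. Seams are smoothed with a short linear crossfade. The article does not describe the actual features, rules, or blending method, so all of these names and choices are illustrative.

```python
def frame_energy(frame):
    # Root-mean-square loudness: the single matching feature assumed here.
    return (sum(x * x for x in frame) / len(frame)) ** 0.5


def split_frames(signal, frame_len):
    # Non-overlapping chunks; a trailing partial chunk is dropped.
    return [signal[i:i + frame_len]
            for i in range(0, len(signal) - frame_len + 1, frame_len)]


def crossfade_join(frames, fade):
    # Join chunks end to end, linearly blending `fade` samples at each seam.
    if not frames:
        return []
    out = list(frames[0])
    for f in frames[1:]:
        for k in range(fade):
            w = (k + 1) / (fade + 1)  # weight of the incoming chunk
            out[-fade + k] = (1 - w) * out[-fade + k] + w * f[k]
        out.extend(f[fade:])
    return out


def concatenate(target, database, frame_len=64, fade=8, continuity_weight=0.1):
    """Rebuild `target` from recorded chunks in `database` (hypothetical sketch).

    Each database entry is a recorded chunk of length frame_len.
    Returns the chosen database indices and the reassembled audio.
    """
    db_feats = [frame_energy(f) for f in database]
    chosen = []
    prev = None
    for frame in split_frames(target, frame_len):
        t = frame_energy(frame)
        # Match cost = loudness mismatch + penalty for breaking continuity.
        costs = [abs(db_feats[j] - t)
                 + (continuity_weight if prev is not None and j != prev + 1 else 0.0)
                 for j in range(len(database))]
        prev = min(range(len(database)), key=costs.__getitem__)
        chosen.append(prev)
    audio = crossfade_join([database[j] for j in chosen], min(fade, frame_len))
    return chosen, audio
```

When `continuity_weight` is zero, each chunk goes to the closest-sounding recording. When it is large, the sketch favors long unbroken runs of the original recording over exact matches. This trade-off shows why a human designer might tune such rules by trial and error.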

A human sound designer oversees the process, choosing the type of fabric and tweaking the rules by which sounds are matched.

In part, James said, the approach was inspired by speech synthesis. "People thought they could synthesize speech using oscillator models," James explained, "but it didn't sound realistic, sort of like 'Speak & Spell,' so they ended up concatenating bits of recorded human speech for realism."

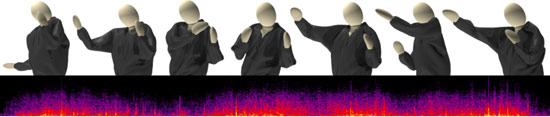

As demonstrations, the researchers created the sounds of cotton and polyester sheets being spread over a couch, a person exercising in a nylon windbreaker and corduroy pants, and the same person boxing from a first-person viewpoint.

The computation is still time-consuming, so it's not ready for real-time virtual environments yet. Getting the ideal sound still involves trial and error, the researchers reported. "This is a proof of concept," James said. "One next step would be optimizing the method to make it run in real time."

Practical applications may require a more elaborate database of cloth sounds, and still to be added are impact and tension sounds like those of a flag whipping in the wind.

The research was supported by the National Science Foundation, fellowships from the Alfred P. Sloan Foundation and the John Simon Guggenheim Memorial Foundation, and donations from Pixar and Autodesk.
