New image sensors could lead to focusing photos after they're taken

By Anne Ju

At the heart of digital photography is a chip called an image sensor that captures a map of the intensity of the light as it comes through the lens and converts it to an electronic signal.

Using new computational methods and a tried-and-true chip-making process, Cornell scientists are taking this technology many steps further. Led by Alyosha Molnar, assistant professor of electrical and computer engineering, they are building image sensors that give detailed readouts of not only the intensity, but also the incident angle of light as it strikes the sensor.

The result could be the next generation of 3-D cameras, as well as the ability to focus photos after they're taken.

"If I want to find something in 3-D space, if I just measure the amount of light hitting different locations, I know a little bit. I'll get some sort of blurry blob," Molnar said. "But if I know the incident angle of the light, I can triangulate back. So the question is how do you measure that?"
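Molnar's point about triangulating back can be illustrated with a little geometry. The sketch below is not the lab's method. It assumes a flat sensor along the x-axis, a single point source, and two sensor locations that each report the incident angle measured from the sensor's normal. The function name `triangulate` and the sign convention are assumptions made for illustration.

```python
import math

def triangulate(x1, theta1, x2, theta2):
    """Locate a point source from incident angles at two sensor positions.

    Assumed geometry: the sensor lies on the line z = 0, the source sits at
    (x0, z0) with z0 > 0, and each angle is measured from the sensor normal
    so that tan(theta_i) = (x_i - x0) / z0.
    """
    t1, t2 = math.tan(theta1), math.tan(theta2)
    if math.isclose(t1, t2):
        raise ValueError("rays are parallel; depth cannot be recovered")
    z0 = (x2 - x1) / (t2 - t1)   # depth of the source above the sensor
    x0 = x1 - z0 * t1            # lateral position of the source
    return x0, z0

# Simulate a source at (1.0, 5.0) seen from sensor positions x = 0 and x = 2.
src_x, src_z = 1.0, 5.0
a1 = math.atan((0.0 - src_x) / src_z)
a2 = math.atan((2.0 - src_x) / src_z)
est_x, est_z = triangulate(0.0, a1, 2.0, a2)
```

With intensity alone, the two locations would only report how bright the source appears. The two angles are what pin down its depth.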

Molnar's solution, which he invented with graduate student Albert Wang, is a uniquely designed pixel for a standard CMOS (complementary metal-oxide semiconductor) image sensor. Each 7-micron pixel, an array of which is fabricated on a CMOS chip, is made of a photodiode under a pair of stacked diffraction gratings.

Shine a light at the pixel, and the top grating allows some light through, creating a diffraction pattern. Changing the incident angle of light changes how this pattern interacts with the second grating, changing how much light gets through to the diode below. In other words, the first grating generates patterns, while the second analyzes the patterns. All this can be done in a standard CMOS manufacturing process -- at no added cost.
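One idealized way to picture this behavior is a pixel whose output rises and falls periodically as the incident angle changes. The sketch below assumes a raised-cosine response, `i0 * (1 + m * cos(beta * theta + alpha))`, which the article does not state. The parameters `beta` (angular sensitivity), `alpha` (phase offset set by the grating alignment), `m` (modulation depth) and `i0` (intensity) are hypothetical names chosen for illustration. Under that assumption, four neighboring pixels with different phase offsets are enough to separate angle from brightness.

```python
import math

def pixel_response(theta, beta, alpha, m=0.5, i0=1.0):
    """Assumed idealized response of one angle-sensitive pixel."""
    return i0 * (1.0 + m * math.cos(beta * theta + alpha))

def four_phase_readings(theta, beta, m=0.5, i0=1.0):
    """Readings from four hypothetical pixels with offsets 0, 90, 180, 270 degrees."""
    phases = (0.0, math.pi / 2, math.pi, 3 * math.pi / 2)
    return [pixel_response(theta, beta, a, m, i0) for a in phases]

def estimate_angle(readings, beta):
    """Recover the incident angle from four phase-shifted readings.

    Differences of opposite phases cancel the constant term, leaving
    quantities proportional to cos(beta*theta) and sin(beta*theta).
    Only unambiguous while |beta * theta| < pi.
    """
    r0, r90, r180, r270 = readings
    cos_part = r0 - r180    # 2 * i0 * m * cos(beta * theta)
    sin_part = r270 - r90   # 2 * i0 * m * sin(beta * theta)
    return math.atan2(sin_part, cos_part) / beta

beta = 10.0
recovered = estimate_angle(four_phase_readings(0.1, beta), beta)
recovered_dim = estimate_angle(four_phase_readings(-0.2, beta, i0=0.3), beta)
```

Because the estimate uses a ratio of differences, it does not depend on how bright the light is, only on the angle at which it arrives.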

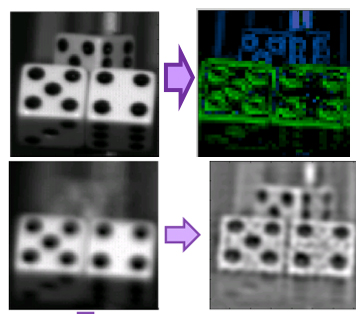

The innovative design lets the chip detect information about the incident angle of the light striking it with more detail than a normal CMOS imager. All the pixels, each of which records a slightly different angle, produce an image containing both angular and spatial information. This information can be analyzed with a mathematical function called the Fourier transform, which makes it possible both to extract the depth of objects in an image and to refocus the image computationally after it has been taken.
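To see why angular information makes after-the-fact focusing possible, consider a toy example. The sketch below does not use the Fourier-based analysis described above. It substitutes a simpler, well-known light-field technique called shift-and-add, applied to a one-dimensional synthetic scene. The data layout (one row of samples per viewing direction `u`) and the function names are assumptions made for illustration.

```python
def make_light_field(views, width, x0, disparity):
    """Synthetic 1-D light field of a single point source.

    In view u the point appears at x0 + disparity * u; the disparity
    stands in for the depth of the point.
    """
    return {
        u: [1.0 if x == x0 + disparity * u else 0.0 for x in range(width)]
        for u in views
    }

def refocus(light_field, shift):
    """Shift each view by shift * u and average (shift-and-add refocusing)."""
    views = list(light_field)
    width = len(light_field[views[0]])
    out = [0.0] * width
    for u in views:
        row = light_field[u]
        for x in range(width):
            src = x + shift * u
            if 0 <= src < width:      # samples outside the sensor count as zero
                out[x] += row[src]
    return [v / len(views) for v in out]

field = make_light_field(views=range(-2, 3), width=21, x0=10, disparity=2)
blurred = refocus(field, shift=0)   # focused at the wrong depth: energy is spread out
sharp = refocus(field, shift=2)     # shift matches the disparity: the point snaps into focus
```

Choosing a different `shift` after capture corresponds to focusing at a different depth, and the shift that gives the sharpest result doubles as a depth estimate for the point.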

Proving this concept of image capture using angle-sensitive pixels has spawned many side projects in Molnar's lab, including a lensless microcamera that spits out two-dimensional (albeit blurry) images.

So far, with the help of a standard Nikon camera lens, their chip can capture an image at 150,000 pixels. That is less than 1 megapixel, which is low by today's camera standards, but the resolution can be improved by building larger chips.

Molnar's technology has been making waves in the electronics, image processing and integrated circuits fields. He shared the most updated design of an angle-sensitive pixel for image capture at the IEEE International Electron Devices Meeting in Washington, D.C., late last year, and he detailed the math behind the device at an international optics and photonics (SPIE) conference in January in San Francisco.

Most recently, he and Wang won the Lewis Award for best paper from the International Solid-State Circuits Conference, Feb. 19-23, for their paper that details the new image-sensor technology.

The company Lytro is marketing a camera that employs similar light-field technology, but Molnar thinks Cornell's technology may prove better in many applications. Molnar's methods provide angular information in a pre-compressed format and allow for depth mapping with minimal computation. This could be especially good for video, which generates very large files, he said.
