‘A completely different game’: Faculty, students harness AI in the classroom

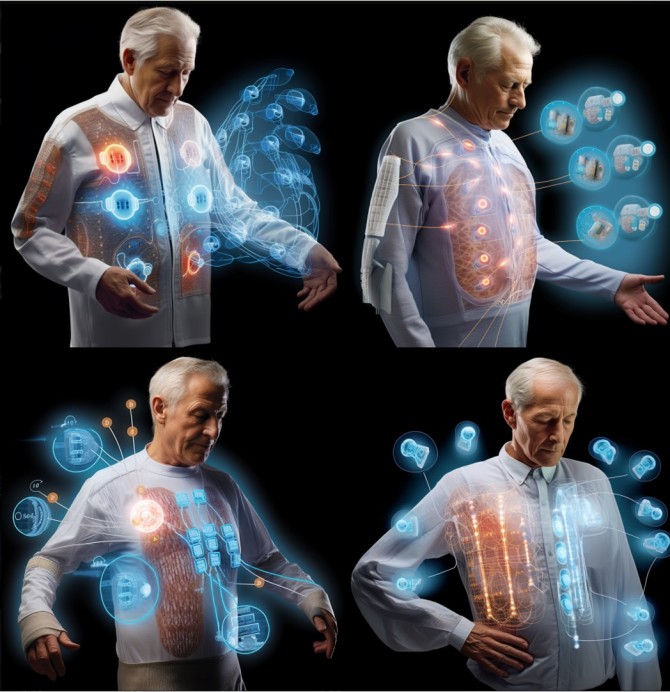

Grace Honeyman ’26 describes her final project, made with AI, for Juan Hinestroza’s class “Textiles, Apparel and Innovation Design” in fall 2023.

By Susan Kelley, Cornell Chronicle

For 15 years, Professor Juan Hinestroza had been teaching his course on innovative textiles essentially the same way. But last fall, he changed it up, requiring his students to use generative AI.

In the past, the final project took a five-student team two months to finish. Last semester, each student working alone with AI did it in two weeks – with superior results.

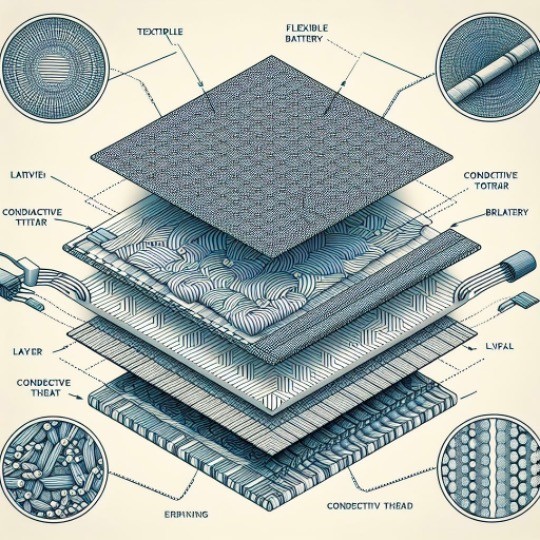

Documenting their progress with blog posts, the students used AI tools to summarize research papers, then used that information to update an existing design that applies innovative textiles to a garment or object to solve a real-world problem. Some improved gloves that ease arthritis. Others updated shoes that convert the wearer’s movement to energy that warms the feet of people with diabetes. They also used the tools to create images of their designs. For the final research posters, they used only AI for imagery, text and references.

“AI really liberated them to dig deeper. It’s like a calculator: You can spend your time doing your calculations by hand. But if you have a calculator, then you can spend more time doing something else,” said Hinestroza, the Rebecca Q. Morgan ’60 Professor of Fiber Science and Apparel Design in the College of Human Ecology (CHE).

He is one of many faculty members across Cornell’s colleges and disciplines who are embracing AI’s capabilities and limitations in their classrooms.

To be sure, some faculty members do not allow the use of AI in their courses; a university committee initiated by Provost Michael I. Kotlikoff offered faculty guidance on the use of AI in the classroom in fall 2023.

“I tell my colleagues, especially those who are opposed to these tools, that you cannot teach the same way you were taught. Because it’s a completely different game,” Hinestroza said. “The reality is that these tools are being used by companies. They’re being used by other universities. So you have to train the students for the real world. The world that we as faculty members think exists – it doesn’t exist anymore.”

Hinestroza is one of five winners of the 2024 Teaching Innovation Awards (see sidebar). They will discuss their approaches at the Provost’s Teaching Innovation Showcase: Creative Responses to Generative AI, on April 11.

“The award winners, and other applicants as well, represent a wide and impressive range of responses to the new challenges and opportunities associated with generative AI in the classroom,” said Steven Jackson, vice provost for academic innovation. “They provide more great evidence of the skill and imagination of Cornell teachers in responding to ongoing changes in the teaching environment.”

‘We’re going to experiment’

Grace Honeyman ’26 had minimal experience with AI prior to taking Hinestroza’s course, “Textiles, Apparel and Innovation Design.” She had never even opened a ChatGPT account on her computer.

The course introduced her and other students to AI tools that can create images and interpret scientific literature, including ChatGPT, Midjourney, BingChat, Claude.ai, DALL-E, Jasper.ai and Adobe’s Firefly and Sensei. “I told them, ‘I’m learning as you are. And we’re going to experiment,’” Hinestroza said. “The students were incredibly patient and played along as we made mistakes and found ways to optimize the use of tools.”

For her final project, Honeyman redesigned a medical undershirt, which reads the vital signs of people with congestive heart failure, to include a piezoelectric nanogenerator that converts the kinetic energy of the wearer’s movement into electrical energy within the textile, eliminating the need for a bulky battery pack.

She fed a series of prompts into Midjourney and Bing.AI, which eventually created images that matched what she had in mind. “I don’t have time to do a five-hour Photoshop tutorial and put together a schematic of what my textile looks like,” she said. “Doing that on DALL-E or Midjourney takes five or 10 minutes, depending on how long it takes you to type in your prompt.”

That gave her more time to research how to update the technology, textile applications and intended use. “A lot of what people are missing is that we students start with an image in our minds,” she said. “It’s not really all being done by AI – we still have to use our creativity.”

And they had to watch out for the tools’ mistakes. Sometimes AI creates images of a hand, for example, that has only three fingers, or “hallucinates” research papers that don’t exist.

“Honestly, being very, very critical of all this technology is one of the most important skills to learn and one of the most important things I did learn from this class,” Honeyman said.

‘The genie is out of the bottle’

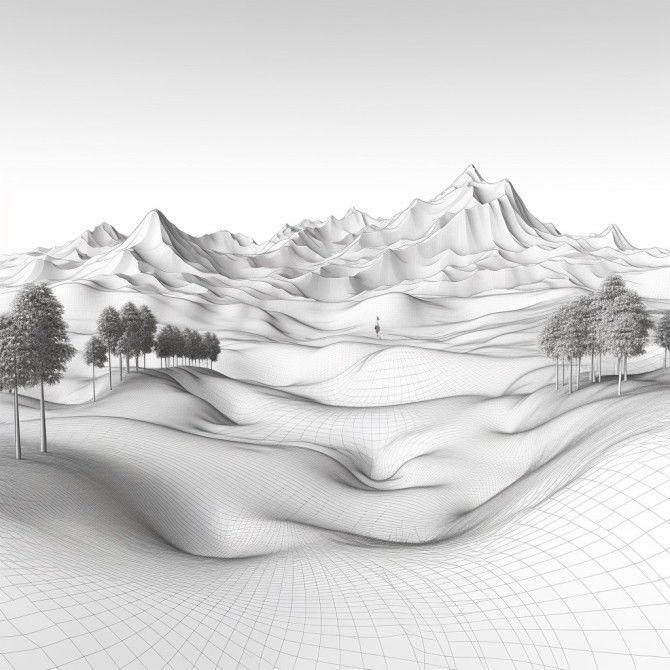

A few major AI image-generating tools were released about a month before Jennifer Birkeland, assistant professor of landscape architecture in the College of Agriculture and Life Sciences, started teaching her course on graphic communication.

And she had heard that many professionals in landscape architecture were already using them, so she started playing around with the tools herself. “I was like, ‘Oh, this is really weird and interesting. This is a really critical tool. I need to incorporate this somehow into my class,’” she said.

Her students wrote a series of prompts to make the tools create an image that they’d work with for the rest of the semester. They then used Rhinoceros 3D, a modeling program, to build 3D models and cross-sections of the object, and developed further iterations through the traditional design process.

She aimed to teach students to think critically and become AI literate. “It’s two-sided,” Birkeland said. “Yes, AI is cool and smart, but it’s also dumb.”

For example, she asked students to use one prompt with different AI tools and compare the results. The exercise demonstrated that each tool draws from a different library of data to generate images, and that the results often include racial and gender biases. “I asked, ‘Did you get only men in this one? Or did you only get white men, versus another tool that might have had something else?’” Birkeland said.

The tools are helping Matthew Sprague, MLA ’26, learn to recognize good design, he said. The images AI tools create are “pretty peculiar and strange-looking, mostly,” he said. “It makes you think about style and what visually works or doesn’t. And you can identify some of that in your own work. You need to have some design skills to take that and make it look right.”

The tools have other limitations. For example, they wouldn’t be able to do assignments for his main studio class, Sprague said. “If I tried to tell it to make those drawings, it wouldn’t have any clue what I was talking about, especially with architectural drawings that need to be precise. It’s not there yet.”

But the tools do level the playing field for students who don’t have a fine-art background, Birkeland said. “People who don’t draw are now able to generate these images, and then use them as references to show people what they’re envisioning.”

Given the increasing use of AI, instructors have a responsibility to teach students how to use it, Birkeland said. “Whether we like it or not, it’s not going away – not at this point. The genie is out of the bottle.”

Transformative change

In the government class “America Confronts the World,” students treated large language models like ChatGPT as interlocutors that supported, rather than substituted for, original writing.

“After attending Center for Teaching Innovation workshops and consulting instructor reflections, we implemented a two-pronged approach that required responsible yet creative student engagement with AI,” said Peter Katzenstein, the Walter S. Carpenter, Jr. Professor of International Studies in the College of Arts and Sciences. He collaborated with his teaching assistants – doctoral candidates Amelia C. Arsenault, M.A. ’23, and Musckaan Chauhan, M.A. ’23 – to integrate AI into the classwork.

“This is a tool that students are using already, and it’s probably not going away,” said Arsenault, whose research focuses on surveillance technologies, which rely heavily on AI. “We thought this would be an opportunity for us to teach them how to use it in a way that was actually most useful for them.”

The course focuses on the wide range of views at play in American politics and foreign policy. Four written assignments integrated AI, while four had non-AI prompts.

In one assignment, students wrote an essay based on class readings and then brainstormed an objection to their own argument; in another, they fed their essay into an AI tool, asked it to come up with an objecting argument, and then wrote a rebuttal to strengthen their thesis. Throughout the course, the students wrote reflections on their experiences with AI.

“The students appreciated that we were willing to deal with it in some way, shape or form,” Arsenault said.

Esteban Lau ’25, a government major in A&S, was surprised to find that when he prompted the AI tool to counter his essay, it argued for his point instead. Like other students, he found he had to try several different prompts to get the result he wanted. “I guess that comes down to what people call ‘prompt engineering.’ I’m actually getting better at using the AI tool,” he said.

“But at the same time, I think there’s a lot of value in not using them and developing your own analytical thought,” he said. “And it’s a difficult balance to strike because, you know, some students do use AI writing as a crutch, and they rely on it too heavily. And I think that impacts their education.”

Increasingly there are tools that purport to identify when a student has cheated and used an AI tool to write their essay, but they are highly unreliable, Arsenault said. “Rather than getting yourself in that position where you have to make very difficult, probably impossible decisions about what is and what is not generated by ChatGPT, we can put up parameters about how we would like to see it used in the class. The goal is, the students will learn real skills, and hopefully take those forward with them as they enter the workplace.”

Katzenstein thinks of AI as transformative rather than marginal change, he said. “Students will have to find their way in this world while writing, as a basic cultural technology, will fundamentally change.”
