Crowdsourcing creates a database of surfaces

By Bill Steele

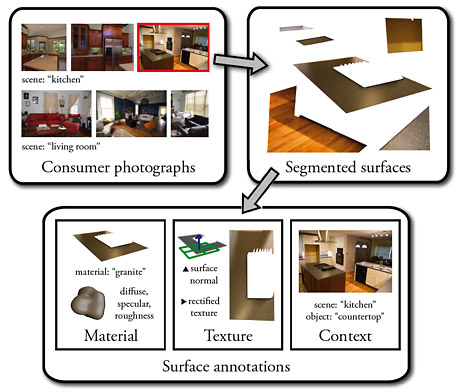

Computer graphics are moving off the movie screen and into everyday life. Home remodeling specialists, for example, may soon be able to show you how your kitchen would look with marble countertops or stainless steel appliances. To do this, computers have to be able to recognize and simulate common materials, so Cornell researchers have drawn on uniquely human skills to build a database of surfaces computers can work with.

OpenSurfaces offers more than 25,000 annotated images that may be used by architects, designers and home remodelers in visualizing their work, and could be a rich source for computer graphics and computer vision researchers looking for ways to recognize materials or synthesize images of them. Of particular value, the researchers said, is that the images were collected from the real world – the Flickr photo-sharing site – rather than sterile laboratory samples. “This catches real materials that show up in the world, including wear and tear and weathering,” said Kavita Bala, associate professor of computer science. “One of the things missing in computer graphics is the realism of normal life.”

The crowdsourcing user interface the researchers developed to teach a team of human reviewers to find and describe the images also represents a valuable contribution to the field. “People are very good at recognizing materials but very bad at communicating the information,” Bala said.

Bala, along with Noah Snavely, assistant professor of computer science, and graduate students Sean Bell and Paul Upchurch, described their work at the 2013 SIGGRAPH conference, July 21-25 in Anaheim, Calif.

“First I want to understand how human beings perceive materials,” Bala explained. “Then I want to create computer vision algorithms that recognize materials, and computer graphics algorithms that can produce images of those materials that have all the subtle features.”

The researchers began by collecting about 100,000 images from Flickr. With home remodeling applications in mind, they looked for tags like “kitchen,” “bedroom” or “living room.” Then they turned to the Amazon Mechanical Turk (MTurk) service, which enables employers to hire online workers to perform tasks that computers are unable to do. They eventually built a workforce of about 2,000 people all over the world to select surfaces displayed in the photos, identify the material in the selection and add comments on the context and how the surface reflected light.

Getting the user interface right was the big challenge, Bala reported. “Given language barriers and cultural differences, you pretty much have to bulletproof the task,” she said. “Drywall,” for instance, describes many walls in the United States, but workers in other countries might enter “concrete” or “plaster.” Eventually the researchers decided to let workers select from a drop-down list rather than entering free-form descriptions. Other properties like shininess and roughness were reported using sliders.

“Using perceptual language works better than graphics terminology,” Bala explained. “Before we refined the design we were spending a lot of money because we couldn't get good data.” At first, she said, it was costing about $3 for each material added to the database, but eventually the cost got down to 10 cents each.

Now in the works, Bala said, is an application to modify images by changing one material into another. Instead of just looking at pictures in a catalog, homeowners could take a photo of their own kitchen and see how it would look with new countertops. Down the line, she added, devices like Google Glass might draw on the database to identify materials in the field.

The research was supported by the National Science Foundation, the Intel Science and Technology Center for Visual Computing and Amazon Web Services in Education.
